In December 2021 McKinsey published a report entitled “The State of AI in 2021”. This article is part of my subjective analysis of this publication. You can find the key conclusions at this link - I will also provide links to other parts of the analysis.

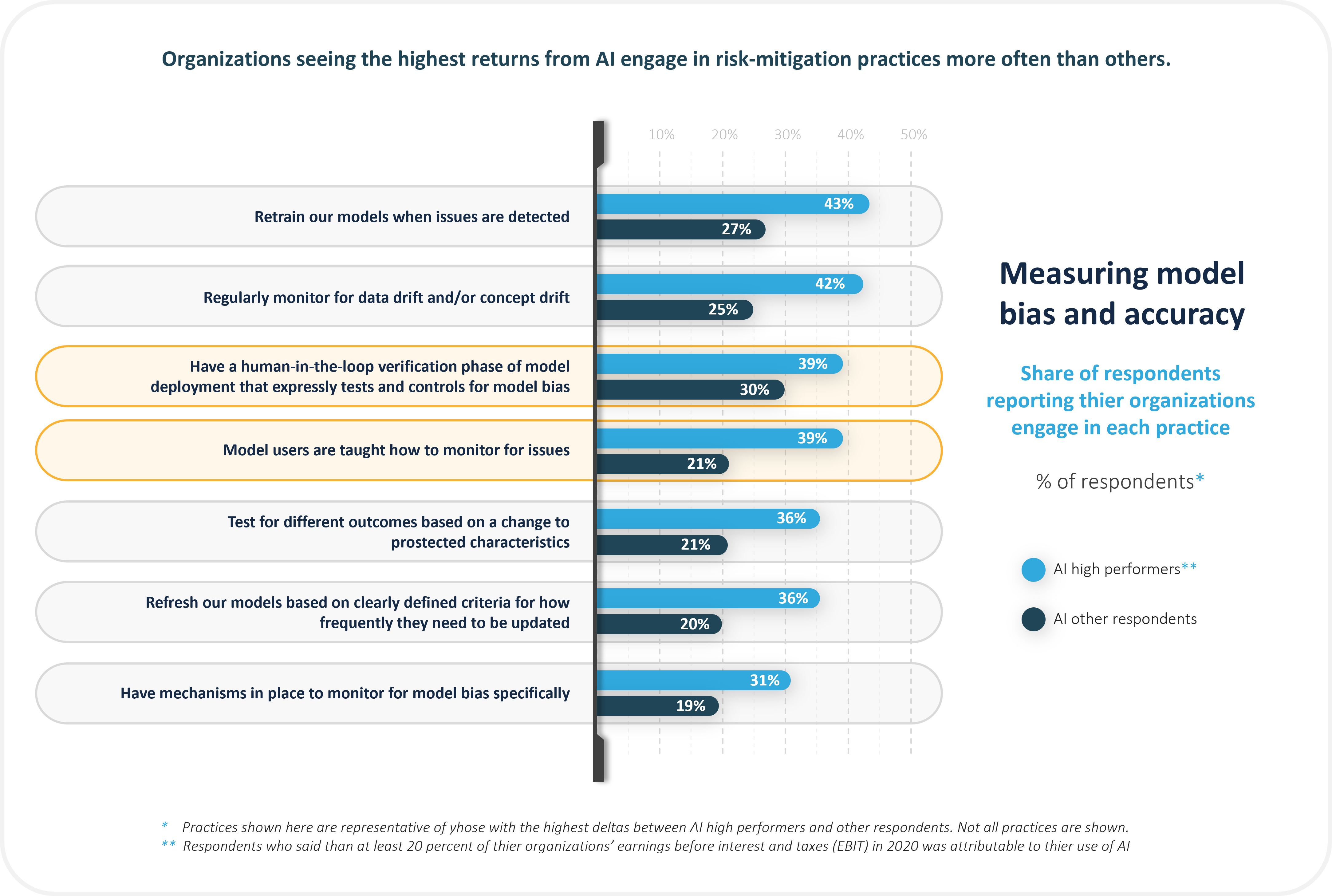

In the section on measuring the limitations and effectiveness of AI models, McKinsey highlights some of the best practices used by the most successful organizations that use AI.

Among these is planning in advance to retrain the model when problems are encountered: 43% of the “high performers” and 27% of the remaining respondents say they do so. Regular data monitoring and refreshing of AI models, as mentioned in earlier articles, are also on the list of good practices.

Of particular interest in this set of good practices is the inclusion of end users, or human control in general, in verifying the quality of an Artificial Intelligence model. Someone has to resolve the cases the AI is unsure about, and those decisions translate into additional training examples for the network. Here, the gap in applying this practice between the “high performers” (39%) and the rest (30%) is notable.

Involving end-users in monitoring and reporting exceptions is almost twice as common among “high performers”, 39% of whom do so, compared to 21% among the remainder.

Based on the McKinsey report “The State of AI in 2021”.

These two aspects in particular caught my attention because, in our experience, including with Image Recognition, they are important to bear in mind.

We recommend considering both levels of control:

- Internal, i.e. people or machines that verify the quality of the model’s performance at the AI provider, which should take place on a daily basis. This team is very often the impetus that triggers the need to retrain the model, to enrich it either with new products or those that already exist but have a changed layout or dimensions. An additional benefit for the customer is that it relieves its employees of the burden of acquiring new data from other departments or from the market.

- External - from users themselves. There is nothing more valuable than a huge population of users who indicate that at some point, in some product, a model did not work properly. We are fortunate to work with users who, by definition, care deeply about the quality of the AI model, as the results often have a direct impact on their remuneration. For example, Sales Representatives, Merchandisers or Shop Staff are remunerated for maintaining an appropriate level of product availability. Moreover, the opportunity to make a complaint (and/or correct the model) is also a good outlet for any potential emotions that may naturally arise when something "doesn't work". It is important that the application itself makes it possible to save information about what has been corrected by the user and when, so that later it can be used during the next stage of AI training. As good practice, we recommend that these corrections are additionally verified by the in-house team to avoid introducing erroneous data into the AI model.
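The external feedback loop described above (store what the user corrected and when, then have the in-house team verify it before it reaches training) can be sketched roughly as follows. This is a minimal illustration only, not a description of any particular product: the `Correction` and `CorrectionLog` names, their fields, and the sample labels are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional, Tuple


@dataclass
class Correction:
    """One user-reported fix to a model prediction (hypothetical schema)."""
    image_id: str
    predicted_label: str   # what the model said
    corrected_label: str   # what the user says it should be
    user_id: str           # who corrected it
    corrected_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)  # when it was corrected
    )
    verified: Optional[bool] = None  # None = still awaiting in-house review


class CorrectionLog:
    """In-memory store of user corrections (a real system would persist these)."""

    def __init__(self) -> None:
        self._items: List[Correction] = []

    def record(self, correction: Correction) -> None:
        """Save a correction submitted from the application."""
        self._items.append(correction)

    def pending_review(self) -> List[Correction]:
        """Corrections the in-house team has not checked yet."""
        return [c for c in self._items if c.verified is None]

    def review(self, correction: Correction, accepted: bool) -> None:
        """In-house verification step: accept or reject a user correction."""
        correction.verified = accepted

    def training_examples(self) -> List[Tuple[str, str]]:
        """Only accepted corrections are passed on to the next training round."""
        return [
            (c.image_id, c.corrected_label)
            for c in self._items
            if c.verified is True
        ]


log = CorrectionLog()
first = Correction("img-001", "cola_330ml", "cola_500ml", user_id="rep-17")
second = Correction("img-002", "juice_1l", "water_1l", user_id="rep-42")
log.record(first)
log.record(second)

log.review(first, accepted=True)    # in-house team confirms the user was right
log.review(second, accepted=False)  # in-house team rejects an erroneous correction

print(log.training_examples())  # [('img-001', 'cola_500ml')]
```

Keeping `verified` as a three-state value (pending, accepted, rejected) is one way to make sure unreviewed corrections never reach the training set by default.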

Having the above-mentioned mechanisms to control the operation of AI not only ensures the safety of the project, but also supports the development of AI itself. Thanks to these mechanisms, AI can constantly develop and evolve according to changes in the market. I would like to emphasize that this is particularly relevant for AI that supports Image Recognition solutions.

To sum up all parts of the analysis, here are my 4 biggest surprises after reading the McKinsey report:

- Lots of companies still do not realize that maintaining a network is as important as building it.

- A direct link between the sophistication of the use of AI and the perception of increased savings/profits associated with its use.

- The extent to which experience with AI improves the ability to estimate the cost of its production and maintenance, and thus to calculate ROI accurately. This, in turn, influences the decision to deploy AI for specific functions within the organization, as in most cases an accurately calculated ROI indicates that it is worth investing in AI.

- How clearly the report shows that users need to be included throughout the process of creating and implementing AI. It strongly emphasizes the importance of both internal control and user feedback. At the same time, many companies we come into contact with still underestimate this element, treating AI as a one-off, production “magic box” that will always work. Working closely and continuously with AI users is something we have been recommending to our clients for years, and I am pleased that this survey confirms the validity of that recommendation.
